Labeling Resource Allocation

Labeling Resource Allocation is Resource Allocation applied to data labeling jobs. In the context of the Alectio Marketplace, the problem consists of routing the labeling tasks requested by users of the Alectio platform to the “best” Alectio labeling partner in real time.

In our case, the objective function seeks the partner best suited to a customer, based on the customer's use case and the relative importance they attribute to three factors: the financial cost of the job, the time needed to complete it, and the accuracy of the results. It also takes into account the individual preferences of the annotators and the fairness of the wages they receive: labeling partners that pay their workforce more generously are more likely to be assigned jobs.

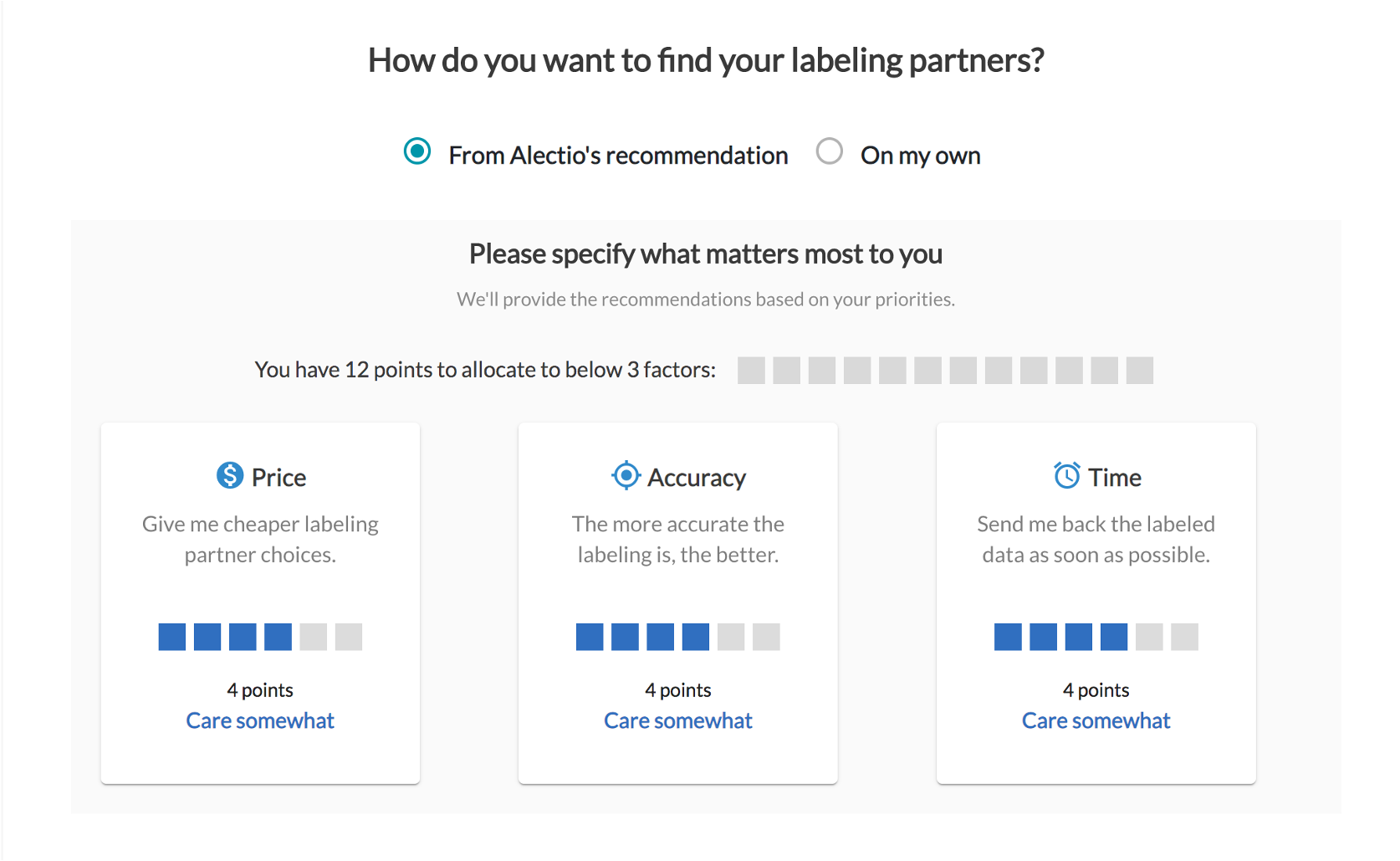

Preference-driven recommendation

To provide the best possible recommendations and account for every unique situation, Alectio asks users to state their priorities explicitly through a point allocation system: the user distributes a total of 12 points across three priorities, namely price, time, and accuracy. If the user puts 6 points on price and 6 on accuracy, they are signaling that they are willing to compromise on time; if they put 4 points on each priority, they are expecting the best trade-off across all three.
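One natural way to turn a point allocation into weights for a ranking function is to divide each priority's points by the 12-point total. The sketch below assumes that mapping and assumes points are non-negative integers; `points_to_weights` is a hypothetical helper, not part of the Alectio platform.

```python
TOTAL_POINTS = 12
PRIORITIES = ("price", "time", "accuracy")


def points_to_weights(points):
    """Validate a 12-point allocation and turn it into weights that sum to 1."""
    if set(points) != set(PRIORITIES):
        raise ValueError(f"expected exactly these priorities: {PRIORITIES}")
    if any(not isinstance(p, int) or p < 0 for p in points.values()):
        raise ValueError("points must be non-negative integers")
    if sum(points.values()) != TOTAL_POINTS:
        raise ValueError(f"points must add up to {TOTAL_POINTS}")
    return {name: points[name] / TOTAL_POINTS for name in PRIORITIES}


print(points_to_weights({"price": 6, "time": 0, "accuracy": 6}))
# {'price': 0.5, 'time': 0.0, 'accuracy': 0.5}
```

With this mapping, the balanced 4/4/4 allocation gives each priority a weight of 1/3, and putting 0 points on time removes it from the trade-off entirely.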

Real-time availability assessment

When the user chooses to use the marketplace, Alectio selects all partners capable of providing the service and sends an API request to each of them to find out how many annotators are immediately available. This information, paired with each partner's average completion time per record, allows Alectio to predict how long the job would take. Every partner shown in the UI is able to start the job immediately.
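Assuming the records are split evenly among the k available annotators and each annotator works at the partner's average pace of t seconds per record, a job of N records takes roughly ⌈N / k⌉ · t seconds. The sketch below applies that estimate; the dictionary `availability` stands in for the responses to the availability API requests, and both function names are hypothetical.

```python
import math


def predict_completion_hours(num_records, avg_seconds_per_record, available_annotators):
    """Estimate wall-clock hours, assuming records are split evenly and
    annotators work in parallel at the partner's average pace."""
    if available_annotators <= 0:
        raise ValueError("no annotators available right now")
    # The busiest annotator labels ceil(N / k) records
    records_per_annotator = math.ceil(num_records / available_annotators)
    return records_per_annotator * avg_seconds_per_record / 3600


def partners_ready_now(availability):
    """Keep only partners that reported at least one free annotator.

    availability: dict partner name -> number of available annotators,
    standing in for the responses to the availability API requests.
    """
    return [name for name, free in availability.items() if free > 0]


availability = {"A": 10, "B": 0, "C": 4}  # hypothetical API responses
for name in partners_ready_now(availability):
    hours = predict_completion_hours(1000, 36, availability[name])
    print(name, round(hours, 2))
# A 1.0
# C 2.5
```

Partner B is filtered out because it cannot start immediately, mirroring the rule that only partners ready to begin are shown.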

Historical data

Alectio records and analyzes the past performance of every job, which gives it an accurate understanding of each partner's strengths and weaknesses per data type and use case. We know which partners are fast and which are accurate, and we track all of these metrics over time. We even blacklist partners that fail to deliver on specific tasks and only reinstate them once they have addressed their issues.
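A minimal sketch of such a performance store, assuming metrics are aggregated per (partner, data type, use case) and that blacklisting applies to one such combination rather than to a partner as a whole. `PartnerHistory` and its methods are hypothetical; the source does not describe how Alectio stores this data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Running totals for one (partner, data type, use case) combination."""
    jobs: int = 0
    accuracy_sum: float = 0.0
    seconds_per_record_sum: float = 0.0


class PartnerHistory:
    """Hypothetical store of past job metrics plus a per-task blacklist."""

    def __init__(self):
        self._stats = defaultdict(RunningStats)
        self._blacklist = set()

    def record_job(self, partner, data_type, use_case, accuracy, seconds_per_record):
        s = self._stats[(partner, data_type, use_case)]
        s.jobs += 1
        s.accuracy_sum += accuracy
        s.seconds_per_record_sum += seconds_per_record

    def mean_accuracy(self, partner, data_type, use_case):
        s = self._stats.get((partner, data_type, use_case))
        return None if s is None else s.accuracy_sum / s.jobs

    def mean_seconds_per_record(self, partner, data_type, use_case):
        s = self._stats.get((partner, data_type, use_case))
        return None if s is None else s.seconds_per_record_sum / s.jobs

    def blacklist(self, partner, data_type, use_case):
        self._blacklist.add((partner, data_type, use_case))

    def reinstate(self, partner, data_type, use_case):
        # Called once the partner has addressed its issues
        self._blacklist.discard((partner, data_type, use_case))

    def is_eligible(self, partner, data_type, use_case):
        return (partner, data_type, use_case) not in self._blacklist


history = PartnerHistory()
history.record_job("A", "image", "bounding boxes", accuracy=0.96, seconds_per_record=30)
history.record_job("A", "image", "bounding boxes", accuracy=0.98, seconds_per_record=26)
print(history.mean_seconds_per_record("A", "image", "bounding boxes"))  # 28.0
history.blacklist("A", "text", "NER")
print(history.is_eligible("A", "text", "NER"))  # False
```

Running sums keep the store small; tracking how metrics evolve over time would additionally require keeping timestamped per-job records.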

Feedback loop

Thanks to our label auditing algorithms and to the analysis of the actions users perform once the results of their labeling tasks become available in the Human-in-the-Loop module, Alectio can measure the accuracy of each job precisely. For example, if a user sends 40 records of a 1,000-record job back to another labeling partner for re-annotation, and the new labels differ for 30 of those records, then about 30 of the 1,000 original labels were wrong, so the accuracy of the job was close to 97% (970 / 1,000). This provides a pragmatic measure of performance.
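The arithmetic in the example above can be sketched as follows. The sketch assumes that every disagreement between the original and re-annotated labels is an error in the original label and that records not sent back were labeled correctly, which is why the result is only an estimate; `estimate_job_accuracy` is a hypothetical helper.

```python
def estimate_job_accuracy(original_labels, reannotated_labels):
    """Estimate job accuracy from a partial re-annotation.

    original_labels: dict record_id -> label for every record in the job.
    reannotated_labels: dict record_id -> label for the records sent back.
    Assumes each disagreement is an error in the original label and that
    records that were not sent back were labeled correctly.
    """
    if not original_labels:
        raise ValueError("the job contains no records")
    unknown = set(reannotated_labels) - set(original_labels)
    if unknown:
        raise ValueError(f"re-annotated records not in the job: {sorted(unknown)}")
    disagreements = sum(
        1 for rid, label in reannotated_labels.items() if original_labels[rid] != label
    )
    return 1 - disagreements / len(original_labels)


# The example from the text: 1,000 records, 40 sent back, 30 relabeled differently
original = {i: "cat" for i in range(1000)}
reannotated = {i: ("dog" if i < 30 else "cat") for i in range(40)}
print(round(estimate_job_accuracy(original, reannotated), 4))  # 0.97
```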

Besides informing users about a partner's average performance on a specific use case, this technique also benefits the labeling partners themselves: they have access to a comprehensive dashboard showing their own statistics and their performance relative to their competitors, so that they can improve their processes and train their annotators accordingly.