Featured photo by Joshua Earle on Unsplash

What a time to be alive if you are a technology enthusiast. If our great-grandparents were to travel to 2022, they would surely feel as if they had landed in the middle of a sci-fi movie shoot: cars that drive themselves, small driverless helicopters delivering groceries, TVs that switch on at a voice command, and cashierless checkouts. Even the voice at the other end of the line when you call to complain about a late delivery isn’t a human’s, though they probably wouldn’t believe us if we told them.

AI applications are booming all around us, and not a month goes by without the press reporting that ML research has reached a new milestone. How would you feel, then, if I told you that we came eerily close to another AI Winter?

What is an AI Winter?

But before telling you the full story about our close encounter with the next AI Winter, let me start by explaining what an AI Winter is.

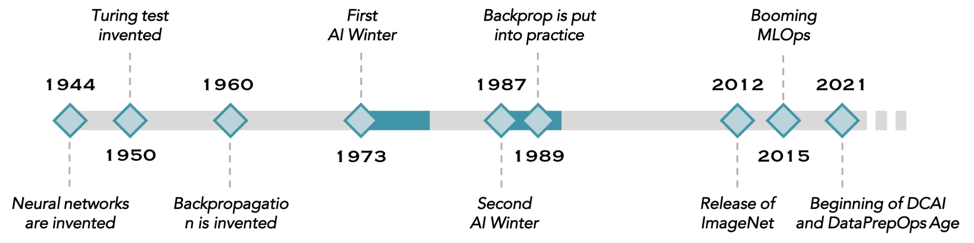

To understand the concept of an AI Winter, it is necessary to dig into the history of AI and Machine Learning. Most people don’t know this, but while the productization of real-life Machine Learning applications such as voice assistants and facial recognition is a fairly recent phenomenon, the theory on which those applications are built is fairly old. The backpropagation algorithm, for example, was invented more than 50 years ago, and the first computational model for neural networks was proposed almost 80 years ago.

Then, you will ask, how come people didn’t have access to voice recognition technology in the seventies? The answer is that previous generations of ML researchers tried really hard to turn their theoretical findings into real-life applications, but faced challenges significant enough that the general public lost interest and funding for AI research dried up. This happened over and over again, sometimes so profoundly that it took years for people to regain excitement for the field, and sometimes in a less dramatic manner. Those periods are referred to as “AI Winters”, and they have consistently punctuated the history of AI.

The History of AI Winters

The AI Winter of 1973-1974

In 1973, after two decades of disappointing progress in AI (and specifically in Machine Translation), the UK Parliament tasked a renowned mathematician by the name of James Lighthill with analyzing the state of AI research. The results of Lighthill’s research were not encouraging, to say the least: his report essentially blamed the AI community for not delivering on its promises, a feeling that many researchers shared back then. Many blamed Marvin Minsky for his unrealistic claims that Artificial General Intelligence was around the corner, and deplored the lack of accountability in this matter. The output of this study would later be remembered as the Lighthill Report, and was made known to the world through the BBC Controversy Series (1973).

The release of this report also coincided with DARPA shifting its focus to “mission-oriented”, actionable research, which led many AI research groups to lose critical funding. The failure of the Speech Understanding Research (SUR) program at Carnegie Mellon was the final blow that convinced the world that AI was overhyped.

The Second AI Winter

After the First AI Winter had all but dried up government funding for AI, researchers had no choice but to direct their attention to the development of commercial applications for AI. The goal was no longer to build an AI capable of general, human-like intelligence, but instead to focus on building “expert systems” capable of performing specific tasks at the human level. Panels of AI experts discussed how to prevent the next AI Winter and warned researchers to be more pragmatic and avoid making unrealistic claims. Slowly, even the Government started regaining interest, and by the mid-80s, AI funding was flowing again.

Soon, a new industry started focusing on providing hardware to support this new research. Lisp machines, which had been developed in the early 70s, were manufactured and distributed by multiple companies. But at a $70,000 price point (that’s $200,000 in today’s money), their sales were abysmal, and the Lisp machine industry collapsed within a few years as cheaper alternatives from IBM and Apple became available, bringing the entire AI industry down with it. AI research was still happening, but usually under the cover of another industry that would quietly incorporate those advances into its larger systems. AI had such bad press that, until the mid-2000s, researchers referred to their work by other, then-niche terms that we are used to today. That is how the terms Machine Learning, Data Mining and Intelligent Systems became more mainstream, and why many are so wary of even using the term AI in public.

Are we overdue for the next AI Winter?

Fast forward to 2022. It’s been decades since the last AI Winter. Most of us only know from this painful history what got passed down through collective memory: remnants of lessons learned by the previous generation of researchers, such as the wisdom to tackle Weak AI before jumping into Strong AI, and not to use the term AI without careful consideration for fear of being perceived as a sci-fi aficionado. Of course, we still have to deal with the Minskys of our day, but while their voices sometimes get a bit too loud for comfort as a result of some of the most recent developments in Computer Vision and NLP, it seems that the industry has learned its lesson.

And yet, unbeknownst to most, we just barely avoided an AI Winter, and are not out of the woods yet.

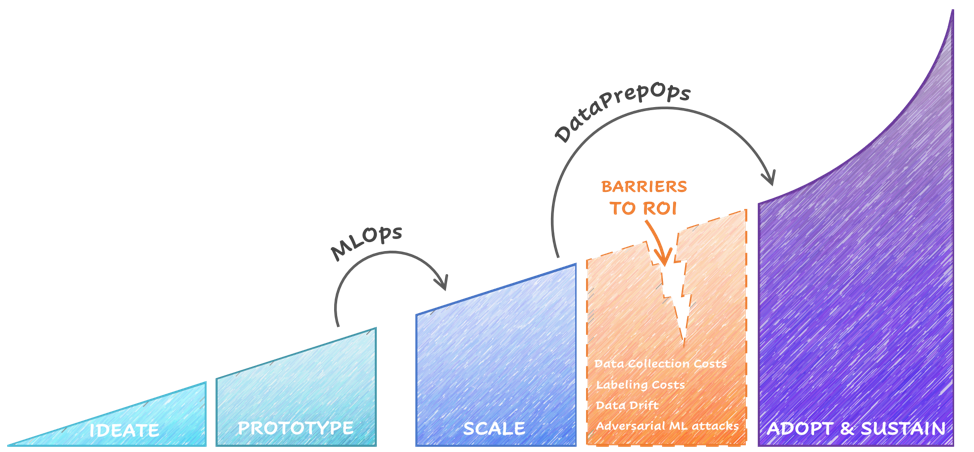

2012 marked a turning point in Deep Learning research. After decades of fundamental research, Deep Learning suddenly became a vehicle for the creation of smart applications, particularly thanks to access to more powerful hardware and to easier data labeling. Within months, companies started hiring data scientists in droves, and data scientist was officially declared the sexiest job of the 21st century. But by the middle of the decade, the frenzy came to a halt: most companies started complaining that, in spite of large monetary investments in AI (sorry, ML), initiatives were not bringing a return on investment because the data scientists they hired were incapable of deploying their own models to production. Experts blamed it on several factors: improper AI curricula at most universities, inadequate internal support for data scientists, and unrealistic expectations from leaders. Ring a bell? If not, it absolutely should. Then why didn’t the industry go into yet another AI Winter?

We undeniably came really close, but by 2020, a new industry had seen the light of day: the MLOps industry. Industry leaders had recognized the need to simplify and automate the pain points associated with the creation and operationalization of a Machine Learning model, and given how urgent it was to help organizations ship their models, most MLOps companies focused on developing solutions to deploy and maintain models effortlessly. Docker, Kubernetes, Airflow: all these technologies played an instrumental role in saving us from another AI Winter. Whether they were buying turn-key solutions from MLOps providers, or following the best practices that had been developed by them to create their own systems, companies quickly became capable of deploying and serving models.

MLOps did save us from an AI Winter at the end of the 2010s… but unfortunately, everything points to the fact that this new Winter was postponed rather than canceled.

Crossing the AI Cost Chasm

2021: almost half of Americans use a voice assistant system. AI funding has risen to a whopping $80B worldwide. An AI strategy is a must-have for pretty much any company. And yet, in spite of it all, AI in practice remains a challenge because of one major issue: ML requires Big Data, which leaves a concerning hole in the budgets of all but a handful of companies. Meanwhile, the companies that provide solutions to manage data at scale (from Cloud Compute to Hardware companies), realizing their edge, took advantage of the situation to deepen the dependence of ML-first companies on their services.

While it had become a lot easier to deploy and operate models at scale, maintaining them at scale remained close to impossible for small- and medium-sized companies. This is what we at Alectio call the AI Cost Chasm.

But it looks like Technology, just like Life, always finds a way, and while most of the industry is experiencing growing pains, the ML Community has started shifting its focus away from Big Data and towards “Smart Data”: that’s what Andrew Ng has dubbed “Data-Centric AI”. The value proposition of Data-Centric AI is elegantly simple: rather than brute-forcing massive amounts of training data into models, as most AI leaders have advocated for the past fifteen years, it is time to “model” and tune our training datasets just like we do for models.
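To make the idea of tuning a dataset a little more concrete, here is a minimal sketch of one possible data-centric step: instead of labeling and training on every available sample, keep only the ones a model is least sure about. This is an illustration under stated assumptions, not a description of any particular product or of Andrew Ng’s method. The function names (`entropy`, `select_most_informative`), the `budget` parameter and the sample predictions are all hypothetical, and entropy-based ranking is just one of many selection criteria one could swap in.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_informative(predictions, budget):
    """Return the indices of the `budget` samples with the highest entropy.

    `predictions` is a list of per-sample class-probability lists.
    High entropy means the model is unsure, so the sample is assumed
    to be more useful to label and train on than a confident one.
    """
    ranked = sorted(
        range(len(predictions)),
        key=lambda i: entropy(predictions[i]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model outputs for five unlabeled samples (3 classes each).
pool_predictions = [
    [0.98, 0.01, 0.01],  # model is confident
    [0.34, 0.33, 0.33],  # model is very uncertain
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],
    [0.90, 0.05, 0.05],
]

# Only spend the labeling budget on the two most uncertain samples.
to_label = select_most_informative(pool_predictions, budget=2)
print(to_label)
```

The point of the sketch is the shift in where the effort goes: the model stays fixed while the training set is curated, which is the opposite of the brute-force approach described above.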

Without the fast, industry-wide adoption of data-centricity (in other words, as long as ML scientists keep favoring a brute-force approach to ML) and the creation of MLOps tools to support the operationalization of Data-Centric Machine Learning, we might be on the verge of the next AI Winter. This is exactly what DataPrepOps is all about: it is a portmanteau term which I coined in 2021 to refer to the need to inject new technologies and best practices into Data Preparation and the overall operationalization of Data-Centric AI.

Ready to join us in preventing the next AI Winter? Just send us an email and let’s go save our industry together.
